This is a quick walkthrough of how to set up Docker Desktop on Windows 10 Pro, covering some of the common pitfalls and a demo stack you can use to verify that your Docker installation can properly access volumes created on Windows drives.

To get started, you need to download Docker Desktop CE from Docker.

Full documentation on how to install can be found here:

https://docs.docker.com/docker-for-windows/install/

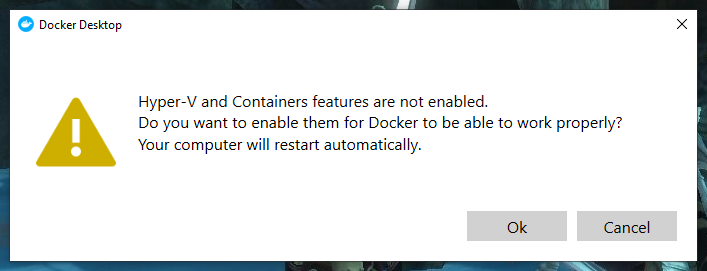

When you run the installation, you may be prompted with the following:

You will need to accept this and allow the computer to restart.

It is possible, however, that virtualization is turned off at the BIOS level on your computer, in which case Docker will not be able to run. To correct that, go into the advanced BIOS settings, enable virtualization, and then allow the computer to restart once more.

You can verify that Docker is installed and running properly with this fun little doodle that they put out for Halloween today:

docker run -it --rm docker/doodle:halloween2019

Now the really fun part: setting up Windows volumes that can be mounted inside swarm services!

The first thing we need to do is tell Docker Desktop that it may access the drive(s) on the Windows machine. Open Docker Desktop and go to Settings. Select the Shared Drives menu item, then check the box for each drive that you want to grant access to.

When you click 'Apply' you will be prompted to enter the credentials of the user that Docker will act as when accessing the drives. This can be one of the most complicated pieces, so do NOT feel bad if you get stuck on it. The most direct solution I have found is to set up a local user account on the machine specifically for Docker, give that account Administrator privileges, and use it to access the drive you have checked. I can't speak to the security of doing so in a public network environment, so please do your own due diligence when setting up users, credentials, and access. If you've done everything else in this walkthrough and Docker still can't see your data, I would put money on it being a user access issue and focus on that.

While you're in the Docker Settings, I recommend switching the builder to use BuildKit. To do so, you need to enable the buildkit feature in your daemon configuration.
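For reference, a minimal daemon configuration that enables BuildKit looks roughly like the fragment below. This is a sketch based on Docker's documented `features` setting, not a copy of my own configuration; if your daemon configuration already contains other keys, merge this entry into it rather than replacing the whole thing.

```json
{
  "features": {
    "buildkit": true
  }
}
```

Docker needs to restart after the change for it to take effect.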

Next, create the folder that you want to be able to mount into your docker containers.

To keep things 'tidy' we'll make a C:\DockerDrives folder and then create the actual folder for this test within that - testcomposervol.

And so that we have it for testing, we'll create an empty file within that folder called danaslocalfile.txt

C:\DockerDrives\testcomposervol\

C:\DockerDrives\testcomposervol\danaslocalfile.txt

Since we want to be able to use this with a Docker Swarm, let's next look at the stack we're going to deploy to test our volumes. In it, we're going to use a single php image, and when that image is used to create a container (and thereby a service), we will mount the same Windows folder into the container in two different ways, so that you can verify that both methods are working locally.

Copy the following as docker-compose-sample.yml:

version: "3.7"

services:
  php:
    image: php:7
    deploy:
      replicas: 1
    volumes:
      - testvol:/var/www/html/test/
      - composervol:/var/www/html/test2/
    command: ['/bin/sh', '-c', "sleep infinity"]
    networks:
      - net

networks:
  net:

volumes:
  composervol:
    external: true
  testvol:
    driver_opts:
      type: "none"
      o: "bind"
      device: "/host_mnt/c/DockerDrives/testcomposervol"

Note that composervol is set to be external to the stack. Here is the command to create that volume, assuming you're using the same paths that I have specified in this post.

docker volume create composervol --opt type=none --opt device=/host_mnt/c/DockerDrives/testcomposervol --opt o=bind
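The device path used here follows a visible pattern relative to the Windows path: the drive letter is lowercased and placed under /host_mnt, and backslashes become forward slashes. As a rough sketch, assuming a POSIX-style shell (for example WSL or Git Bash) and assuming your Docker Desktop version uses the same /host_mnt layout shown in this post, a small helper can do the conversion for other folders. The function name to_host_mnt is hypothetical and not part of Docker.

```shell
# Hypothetical helper: convert a Windows path such as C:\Foo\Bar into the
# /host_mnt/<drive>/... form used as the volume device in this post.
# Assumption: the /host_mnt layout matches your Docker Desktop version.
to_host_mnt() {
  # Normalise backslashes to forward slashes, e.g. C:\a\b -> C:/a/b
  path=$(printf '%s' "$1" | tr '\\' '/')
  # Drive letter: first character, lowercased.
  drive=$(printf '%s' "$path" | cut -c1 | tr '[:upper:]' '[:lower:]')
  # Everything after the "C:" prefix, minus one trailing slash if present.
  rest=${path#?:}
  rest=${rest%/}
  printf '/host_mnt/%s%s\n' "$drive" "$rest"
}
```

Calling to_host_mnt 'C:\DockerDrives\testcomposervol' prints /host_mnt/c/DockerDrives/testcomposervol, which matches the device value used in this post. If the result does not work on your machine, inspecting a container as described at the end of this post is the more reliable way to find the path.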

At this point we have everything we need to have a valid running stack.

Assuming you have not already done so, now is the time to initialize the swarm on the machine:

docker swarm init

(You can remove this when you are done by using the docker swarm leave --force command.)

Now, deploy the stack from the directory where you created your docker-compose-sample.yml file:

docker stack deploy -c docker-compose-sample.yml vm

It will create the necessary network and service, as well as the volume testvol that was defined locally in the file. The service will also have access to the composervol volume that we created manually, and should have both mounted inside the php container as /var/www/html/test and /var/www/html/test2.

You should be able to see that the services are up, the folders are present, and the test file we created before we deployed the stack is present within the test folder(s).
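One way to run that check from the command line is sketched below. It assumes a POSIX-style shell such as WSL (Git Bash may rewrite arguments that start with / before they reach docker), a single-node swarm, and the stack name vm used in this post, so that the service is called vm_php. The function name check_mounts is hypothetical.

```shell
# Hypothetical helper: list both mounted folders inside the php container.
# Assumes the stack was deployed as "vm", so the service is vm_php.
check_mounts() {
  # Find the container backing the vm_php service on this node.
  cid=$(docker ps --filter "name=vm_php" --format '{{.ID}}' | head -n 1)
  if [ -z "$cid" ]; then
    echo "no running vm_php container found" >&2
    return 1
  fi
  # Both listings should show the test file created earlier.
  docker exec "$cid" ls /var/www/html/test /var/www/html/test2
}
```

After defining the function, run check_mounts; if the mounts are working, danaslocalfile.txt should be listed under both folders.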

You can further test your volume integration by creating a file within the container's test or test2/ folder and seeing it appear in the alternate folder and in your C:\DockerDrives\testcomposervol\ folder.

Handy commands for debugging your service if it fails to start:

docker stack ps vm --no-trunc

and

docker service logs vm_php -f

If you've done all of this and you're still getting mount errors, I suggest going back and looking at the user permissions one more time.

When you're finished, don't forget to take your stack down:

docker stack rm vm

You can choose to leave your swarm running, or remove it with the leave --force command shown previously. It will remain running on your machine until you explicitly remove it.

As a reference point, if you're having trouble determining the proper device path for your folder, you can try running a Docker alpine image with the folder mounted at the command line using traditional path syntax, then inspect the container to see how Docker interpreted that local path, like so:

docker run -v c:/DockerDrives/testcomposervol:/data alpine ls /data

That command should spit out the directory listing showing the test file we created inside the folder. (Note: I did not use the --rm toggle so the container is still present on my system and available for inspection.)

Inspecting the container reveals the Docker-interpreted path. We're looking specifically for the 'Source' element of the mount.
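A minimal sketch of that inspection step follows, assuming a POSIX-style shell and assuming the alpine container from the previous command is the most recently created container on the machine. The function name show_mount_sources is hypothetical; docker ps -lq and the json template function are standard docker CLI features.

```shell
# Hypothetical helper: print the mount details of the most recently
# created container (running or exited) as JSON.
show_mount_sources() {
  # -l selects the latest created container, -q prints only its ID.
  cid=$(docker ps -lq)
  if [ -z "$cid" ]; then
    echo "no containers found" >&2
    return 1
  fi
  # The "Source" field in the output is the host path as Docker sees it.
  docker inspect --format '{{json .Mounts}}' "$cid"
}
```

Run show_mount_sources right after the alpine command and look for the Source value in the output; that is the path to use as the device in the volume definition.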

Good luck and Happy Halloween!
